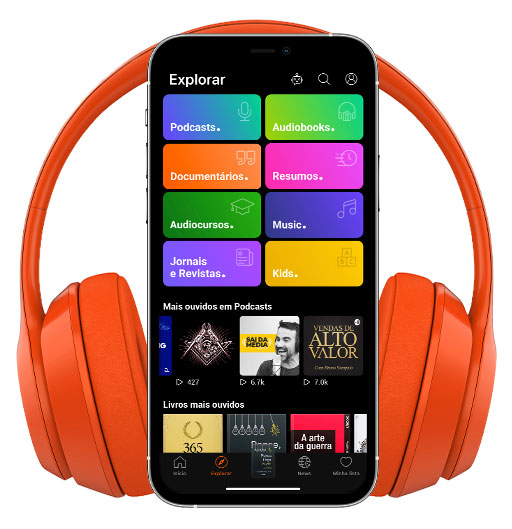

The Future Of Life

- Author: Various

- Narrator: Various

- Publisher: Podcast

- Duration: 221:00:33

Information:

Synopsis

FLI catalyzes and supports research and initiatives for safeguarding life and developing optimistic visions of the future, including positive ways for humanity to steer its own course considering new technologies and challenges. Among our objectives is to inspire discussion and a sharing of ideas. As such, we interview researchers and thought leaders who we believe will help spur discussion within our community. The interviews do not necessarily represent FLI's opinions or views.

Episodes

-

Imagine A World: What if global challenges led to more centralization?

12/09/2023 Duration: 01h28s

What if we had one advanced AI system for the entire world? Would this lead to a world 'beyond' nation states - and do we want this? Imagine a World is a podcast exploring a range of plausible and positive futures with advanced AI, produced by the Future of Life Institute. We interview the creators of 8 diverse and thought-provoking imagined futures that we received as part of the worldbuilding contest FLI ran last year. In the third episode of Imagine A World, we explore the fictional worldbuild titled 'Core Central'. How does a team of seven academics agree on one cohesive imagined world? That's a question the team behind 'Core Central', a second-place prizewinner in the FLI Worldbuilding Contest, had to figure out as they went along. In the end, this entry's realistic sense of multipolarity and messiness reflects positively on its organic formulation. The team settled on one core, centralised AGI system as the governance model for their entire world. This eventually moves their world 'beyond' nation states.

-

Tom Davidson on How Quickly AI Could Automate the Economy

08/09/2023 Duration: 01h56min

Tom Davidson joins the podcast to discuss how AI could quickly automate most cognitive tasks, including AI research, and why this would be risky.

Timestamps: 00:00 The current pace of AI 03:58 Near-term risks from AI 09:34 Historical analogies to AI 13:58 AI benchmarks VS economic impact 18:30 AI takeoff speed and bottlenecks 31:09 Tom's model of AI takeoff speed 36:21 How AI could automate AI research 41:49 Bottlenecks to AI automating AI hardware 46:15 How much of AI research is automated now? 48:26 From 20% to 100% automation 53:24 AI takeoff in 3 years 1:09:15 Economic impacts of fast AI takeoff 1:12:51 Bottlenecks slowing AI takeoff 1:20:06 Does the market predict a fast AI takeoff? 1:25:39 "Hard to avoid AGI by 2060" 1:27:22 Risks from AI over the next 20 years 1:31:43 AI progress without more compute 1:44:01 What if AI models fail safety evaluations? 1:45:33 Cybersecurity at AI companies 1:47:33 Will AI turn out well for humanity? 1:50:15 AI and board games

-

Imagine A World: What if we designed and built AI in an inclusive way?

05/09/2023 Duration: 52min

How does who is involved in the design of AI affect the possibilities for our future? Why isn’t the design of AI inclusive already? Can technology solve all our problems? Can human nature change? Do we want either of these things to happen? Imagine a World is a podcast exploring a range of plausible and positive futures with advanced AI, produced by the Future of Life Institute. We interview the creators of 8 diverse and thought-provoking imagined futures that we received as part of the worldbuilding contest FLI ran last year. In this second episode of Imagine A World, we explore the fictional worldbuild titled 'Crossing Points', a second-place entry in FLI's worldbuilding contest. Joining Guillaume Riesen on the Imagine a World podcast this time are two members of the Crossing Points team, Elaine Czech and Vanessa Hanschke, both academics at the University of Bristol. Elaine has a background in art and design, and is studying the accessibility of technologies for the elderly. Vanessa is studying responsible

-

Imagine A World: What if new governance mechanisms helped us coordinate?

05/09/2023 Duration: 01h02min

Are today's democratic systems equipped well enough to create the best possible future for everyone? If they're not, what systems might work better? And are governments around the world taking the destabilizing threats of new technologies seriously enough, or will it take a dramatic event, such as an AI-driven war, for them to get their act together? Imagine a World is a podcast exploring a range of plausible and positive futures with advanced AI, produced by the Future of Life Institute. We interview the creators of 8 diverse and thought-provoking imagined futures that we received as part of the worldbuilding contest FLI ran last year. In this first episode of Imagine A World, we explore the fictional worldbuild titled 'Peace Through Prophecy'. Host Guillaume Riesen speaks to the makers of 'Peace Through Prophecy', a second-place entry in FLI's Worldbuilding Contest. The worldbuild was created by Jackson Wagner, Diana Gurvich and Holly Oatley. In the episode, Jackson and Holly discuss just a few of the many ideas

-

New: Imagine A World Podcast [TRAILER]

29/08/2023 Duration: 02min

Coming Soon… The year is 2045. Humanity is not extinct, nor living in a dystopia. It has averted climate disaster and major wars. Instead, AI and other new technologies are helping to make the world more peaceful, happy and equal. How? This was what we asked the entrants of our Worldbuilding Contest to imagine last year. Our new podcast series digs deeper into the eight winning entries, their ideas and solutions, the diverse teams behind them and the challenges they faced. You might love some; others you might not choose to inhabit. FLI is not endorsing any one idea. Rather, we hope to grow the conversation about what futures people get excited about. Ask yourself, with each episode, is this a world you’d want to live in? And if not, what would you prefer? Don’t miss the first two episodes coming to your feed at the start of September! In the meantime, do explore the winning worlds, if you haven’t already: https://worldbuild.ai/

-

Robert Trager on International AI Governance and Cybersecurity at AI Companies

20/08/2023 Duration: 01h44min

Robert Trager joins the podcast to discuss AI governance, the incentives of governments and companies, the track record of international regulation, the security dilemma in AI, cybersecurity at AI companies, and skepticism about AI governance. We also discuss Robert's forthcoming paper "International Governance of Civilian AI: A Jurisdictional Certification Approach". You can read more about Robert's work at https://www.governance.ai

Timestamps: 00:00 The goals of AI governance 08:38 Incentives of governments and companies 18:58 Benefits of regulatory diversity 28:50 The track record of anticipatory regulation 37:55 The security dilemma in AI 46:20 Offense-defense balance in AI 53:27 Failure rates and international agreements 1:00:33 Verification of compliance 1:07:50 Controlling AI supply chains 1:13:47 Cybersecurity at AI companies 1:21:30 The jurisdictional certification approach 1:28:40 Objections to AI governance

-

Jason Crawford on Progress and Risks from AI

21/07/2023 Duration: 01h25min

Jason Crawford joins the podcast to discuss the history of progress, the future of economic growth, and the relationship between progress and risks from AI. You can read more about Jason's work at https://rootsofprogress.org

Timestamps: 00:00 Eras of human progress 06:47 Flywheels of progress 17:56 Main causes of progress 21:01 Progress and risk 32:49 Safety as part of progress 45:20 Slowing down specific technologies? 52:29 Four lenses on AI risk 58:48 Analogies causing disagreement 1:00:54 Solutionism about AI 1:10:43 Insurance, subsidies, and bug bounties for AI risk 1:13:24 How is AI different from other technologies? 1:15:54 Future scenarios of economic growth

-

Special: Jaan Tallinn on Pausing Giant AI Experiments

06/07/2023 Duration: 01h41min

On this special episode of the podcast, Jaan Tallinn talks with Nathan Labenz about Jaan's model of AI risk, the future of AI development, and pausing giant AI experiments.

Timestamps: 0:00 Nathan introduces Jaan 4:22 AI safety and Future of Life Institute 5:55 Jaan's first meeting with Eliezer Yudkowsky 12:04 Future of AI evolution 14:58 Jaan's investments in AI companies 23:06 The emerging danger paradigm 26:53 Economic transformation with AI 32:31 AI supervising itself 34:06 Language models and validation 38:49 Lack of insight into evolutionary selection process 41:56 Current estimate for life-ending catastrophe 44:52 Inverse scaling law 53:03 Our luck given the softness of language models 55:07 Future of language models 59:43 The Moore's law of mad science 1:01:45 GPT-5 type project 1:07:43 The AI race dynamics 1:09:43 AI alignment with the latest models 1:13:14 AI research investment and safety 1:19:43 What a six-month pause buys us 1:25:44 AI passing the Turing Test 1:28:16 AI safety and risk 1:32

-

Joe Carlsmith on How We Change Our Minds About AI Risk

22/06/2023 Duration: 02h24min

Joe Carlsmith joins the podcast to discuss how we change our minds about AI risk, gut feelings versus abstract models, and what to do if transformative AI is coming soon. You can read more about Joe's work at https://joecarlsmith.com.

Timestamps: 00:00 Predictable updating on AI risk 07:27 Abstract models versus gut feelings 22:06 How Joe began believing in AI risk 29:06 Is AI risk falsifiable? 35:39 Types of skepticisms about AI risk 44:51 Are we fundamentally confused? 53:35 Becoming alienated from ourselves? 1:00:12 What will change people's minds? 1:12:34 Outline of different futures 1:20:43 Humanity losing touch with reality 1:27:14 Can we understand AI sentience? 1:36:31 Distinguishing real from fake sentience 1:39:54 AI doomer epistemology 1:45:23 AI benchmarks versus real-world AI 1:53:00 AI improving AI research and development 2:01:08 What if transformative AI comes soon? 2:07:21 AI safety if transformative AI comes soon 2:16:52 AI systems interpreting other AI systems 2:19:38 Phil

-

Dan Hendrycks on Why Evolution Favors AIs over Humans

08/06/2023 Duration: 02h26min

Dan Hendrycks joins the podcast to discuss evolutionary dynamics in AI development and how we could develop AI safely. You can read more about Dan's work at https://www.safe.ai

Timestamps: 00:00 Corporate AI race 06:28 Evolutionary dynamics in AI 25:26 Why evolution applies to AI 50:58 Deceptive AI 1:06:04 Competition erodes safety 1:17:40 Evolutionary fitness: humans versus AI 1:26:32 Different paradigms of AI risk 1:42:57 Interpreting AI systems 1:58:03 Honest AI and uncertain AI 2:06:52 Empirical and conceptual work 2:12:16 Losing touch with reality

Social Media Links: ➡️ WEBSITE: https://futureoflife.org ➡️ TWITTER: https://twitter.com/FLIxrisk ➡️ INSTAGRAM: https://www.instagram.com/futureoflifeinstitute/ ➡️ META: https://www.facebook.com/futureoflifeinstitute ➡️ LINKEDIN: https://www.linkedin.com/company/future-of-life-institute/

-

Roman Yampolskiy on Objections to AI Safety

26/05/2023 Duration: 01h42min

Roman Yampolskiy joins the podcast to discuss various objections to AI safety, impossibility results for AI, and how much risk civilization should accept from emerging technologies. You can read more about Roman's work at http://cecs.louisville.edu/ry/

Timestamps: 00:00 Objections to AI safety 15:06 Will robots make AI risks salient? 27:51 Was early AI safety research useful? 37:28 Impossibility results for AI 47:25 How much risk should we accept? 1:01:21 Exponential or S-curve? 1:12:27 Will AI accidents increase? 1:23:56 Will we know who was right about AI? 1:33:33 Difference between AI output and AI model

-

Nathan Labenz on How AI Will Transform the Economy

11/05/2023 Duration: 01h06min

Nathan Labenz joins the podcast to discuss the economic effects of AI on growth, productivity, and employment. We also talk about whether AI might have catastrophic effects on the world. You can read more about Nathan's work at https://www.cognitiverevolution.ai

Timestamps: 00:00 Economic transformation from AI 11:15 Productivity increases from technology 17:44 AI effects on employment 28:43 Life without jobs 38:42 Losing contact with reality 42:31 Catastrophic risks from AI 53:52 Scaling AI training runs 1:02:39 Stable opinions on AI?

-

Nathan Labenz on the Cognitive Revolution, Red Teaming GPT-4, and Potential Dangers of AI

04/05/2023 Duration: 59min

Nathan Labenz joins the podcast to discuss the cognitive revolution, his experience red teaming GPT-4, and the potential near-term dangers of AI. You can read more about Nathan's work at https://www.cognitiverevolution.ai

Timestamps: 00:00 The cognitive revolution 07:47 Red teaming GPT-4 24:00 Coming to believe in transformative AI 30:14 Is AI depth or breadth most impressive? 42:52 Potential near-term dangers from AI

-

Maryanna Saenko on Venture Capital, Philanthropy, and Ethical Technology

27/04/2023 Duration: 01h17min

Maryanna Saenko joins the podcast to discuss how venture capital works, how to fund innovation, and what the fields of investing and philanthropy could learn from each other. You can read more about Maryanna's work at https://future.ventures

Timestamps: 00:00 How does venture capital work? 09:01 Failure and success for startups 13:22 Is overconfidence necessary? 19:20 Repeat entrepreneurs 24:38 Long-term investing 30:36 Feedback loops from investments 35:05 Timing investments 38:35 The hardware-software dichotomy 42:19 Innovation prizes 45:43 VC lessons for philanthropy 51:03 Creating new markets 54:01 Investing versus philanthropy 56:14 Technology preying on human frailty 1:00:55 Are good ideas getting harder to find? 1:06:17 Artificial intelligence 1:12:41 Funding ethics research 1:14:25 Is philosophy useful?

-

Connor Leahy on the State of AI and Alignment Research

20/04/2023 Duration: 52min

Connor Leahy joins the podcast to discuss the state of AI. Which labs are in front? Which alignment solutions might work? How will the public react to more capable AI? You can read more about Connor's work at https://conjecture.dev

Timestamps: 00:00 Landscape of AI research labs 10:13 Is AGI a useful term? 13:31 AI predictions 17:56 Reinforcement learning from human feedback 29:53 Mechanistic interpretability 33:37 Yudkowsky and Christiano 41:39 Cognitive Emulations 43:11 Public reactions to AI

-

Connor Leahy on AGI and Cognitive Emulation

13/04/2023 Duration: 01h36min

Connor Leahy joins the podcast to discuss GPT-4, magic, cognitive emulation, demand for human-like AI, and aligning superintelligence. You can read more about Connor's work at https://conjecture.dev

Timestamps: 00:00 GPT-4 16:35 "Magic" in machine learning 27:43 Cognitive emulations 38:00 Machine learning VS explainability 48:00 Human data = human AI? 1:00:07 Analogies for cognitive emulations 1:26:03 Demand for human-like AI 1:31:50 Aligning superintelligence

-

Lennart Heim on Compute Governance

06/04/2023 Duration: 50min

Lennart Heim joins the podcast to discuss options for governing the compute used by AI labs and potential problems with this approach to AI safety. You can read more about Lennart's work here: https://heim.xyz/about/

Timestamps: 00:00 Introduction 00:37 AI risk 03:33 Why focus on compute? 11:27 Monitoring compute 20:30 Restricting compute 26:54 Subsidising compute 34:00 Compute as a bottleneck 38:41 US and China 42:14 Unintended consequences 46:50 Will AI be like nuclear energy?

-

Lennart Heim on the AI Triad: Compute, Data, and Algorithms

30/03/2023 Duration: 47min

Lennart Heim joins the podcast to discuss how we can forecast AI progress by researching AI hardware. You can read more about Lennart's work here: https://heim.xyz/about/

Timestamps: 00:00 Introduction 01:00 The AI triad 06:26 Modern chip production 15:54 Forecasting AI with compute 27:18 Running out of data? 32:37 Three eras of AI training 37:58 Next chip paradigm 44:21 AI takeoff speeds

-

Liv Boeree on Poker, GPT-4, and the Future of AI

23/03/2023 Duration: 51min

Liv Boeree joins the podcast to discuss poker, GPT-4, human-AI interaction, whether this is the most important century, and building a dataset of human wisdom. You can read more about Liv's work here: https://livboeree.com

Timestamps: 00:00 Introduction 00:36 AI in Poker 09:35 Game-playing AI 13:45 GPT-4 and generative AI 26:41 Human-AI interaction 32:05 AI arms race risks 39:32 Most important century? 42:36 Diminishing returns to intelligence? 49:14 Dataset of human wisdom/meaning

-

Liv Boeree on Moloch, Beauty Filters, Game Theory, Institutions, and AI

16/03/2023 Duration: 42min

Liv Boeree joins the podcast to discuss Moloch, beauty filters, game theory, institutional change, and artificial intelligence. You can read more about Liv's work here: https://livboeree.com

Timestamps: 00:00 Introduction 01:57 What is Moloch? 04:13 Beauty filters 10:06 Science citations 15:18 Resisting Moloch 20:51 New institutions 26:02 Moloch and WinWin 28:41 Changing systems 33:37 Artificial intelligence 39:14 AI acceleration