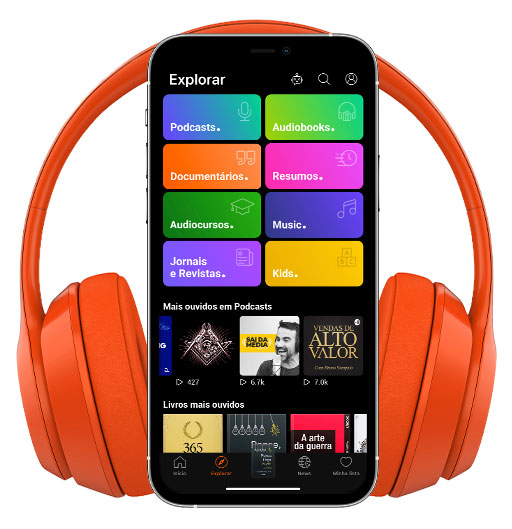

The Future Of Life

- Author: Various

- Narrator: Various

- Publisher: Podcast

- Duration: 222:45:53

Information:

Synopsis

FLI catalyzes and supports research and initiatives for safeguarding life and developing optimistic visions of the future, including positive ways for humanity to steer its own course considering new technologies and challenges. Among our objectives is to inspire discussion and a sharing of ideas. As such, we interview researchers and thought leaders who we believe will help spur discussion within our community. The interviews do not necessarily represent FLI's opinions or views.

Episodes

-

AIAP: Inverse Reinforcement Learning and Inferring Human Preferences with Dylan Hadfield-Menell

25/04/2018 Duration: 01h25min

Inverse Reinforcement Learning and Inferring Human Preferences is the first podcast in the new AI Alignment series, hosted by Lucas Perry. This series will be covering and exploring the AI alignment problem across a large variety of domains, reflecting the fundamentally interdisciplinary nature of AI alignment. Broadly, we will be having discussions with technical and non-technical researchers across a variety of areas, such as machine learning, AI safety, governance, coordination, ethics, philosophy, and psychology as they pertain to the project of creating beneficial AI. If this sounds interesting to you, we hope that you will join in the conversations by following or subscribing to us on YouTube, SoundCloud, or your preferred podcast site/application. In this podcast, Lucas spoke with Dylan Hadfield-Menell, a fifth-year Ph.D. student at UC Berkeley. Dylan’s research focuses on the value alignment problem in artificial intelligence. He is ultimately concerned with designing algorithms that can learn about a

-

Navigating AI Safety -- From Malicious Use to Accidents

30/03/2018 Duration: 58min

Is the malicious use of artificial intelligence inevitable? If the history of technological progress has taught us anything, it's that every "beneficial" technological breakthrough can be used to cause harm. How can we keep bad actors from using otherwise beneficial AI technology to hurt others? How can we ensure that AI technology is designed thoughtfully to prevent accidental harm or misuse? On this month's podcast, Ariel spoke with FLI co-founder Victoria Krakovna and Shahar Avin from the Center for the Study of Existential Risk (CSER). They talk about CSER's recent report on forecasting, preventing, and mitigating the malicious uses of AI, along with the many efforts to ensure safe and beneficial AI.

-

AI, Ethics And The Value Alignment Problem With Meia Chita-Tegmark And Lucas Perry

28/02/2018 Duration: 49min

What does it mean to create beneficial artificial intelligence? How can we expect to align AIs with human values if humans can't even agree on what we value? Building safe and beneficial AI involves tricky technical research problems, but it also requires input from philosophers, ethicists, and psychologists on these fundamental questions. How can we ensure the most effective collaboration? Ariel spoke with FLI's Meia Chita-Tegmark and Lucas Perry on this month's podcast about the value alignment problem: the challenge of aligning the goals and actions of AI systems with the goals and intentions of humans.

-

Top AI Breakthroughs and Challenges of 2017

31/01/2018 Duration: 30min

AlphaZero, progress in meta-learning, the role of AI in fake news, the difficulty of developing fair machine learning -- 2017 was another year of big breakthroughs and big challenges for AI researchers! To discuss this more, we invited FLI's Richard Mallah and Chelsea Finn from UC Berkeley to join Ariel for this month's podcast. They talked about some of the progress they were most excited to see last year and what they're looking forward to in the coming year.

-

Beneficial AI And Existential Hope In 2018

21/12/2017 Duration: 37min

For most of us, 2017 has been a roller coaster, from increased nuclear threats to incredible advancements in AI to crazy news cycles. But while it’s easy to be discouraged by various news stories, we at FLI find ourselves hopeful that we can still create a bright future. In this episode, the FLI team discusses the past year and the momentum we've built, including: the Asilomar Principles, our 2018 AI safety grants competition, the recent Long Beach workshop on Value Alignment, and how we've honored one of civilization's greatest heroes.

-

Balancing the Risks of Future Technologies With Andrew Maynard and Jack Stilgoe

30/11/2017 Duration: 35min

What does it mean for technology to “get it right,” and why do tech companies ignore long-term risks in their research? How can we balance near-term and long-term AI risks? And as tech companies become increasingly powerful, how can we ensure that the public has a say in determining our collective future? To discuss how we can best prepare for societal risks, Ariel spoke with Andrew Maynard and Jack Stilgoe on this month’s podcast. Andrew directs the Risk Innovation Lab in the Arizona State University School for the Future of Innovation in Society, where his work focuses on exploring how emerging and converging technologies can be developed and used responsibly within an increasingly complex world. Jack is a senior lecturer in science and technology studies at University College London, where he works on science and innovation policy with a particular interest in emerging technologies.

-

AI Ethics, the Trolley Problem, and a Twitter Ghost Story with Joshua Greene And Iyad Rahwan

31/10/2017 Duration: 45min

As technically challenging as it may be to develop safe and beneficial AI, this challenge also raises some thorny questions regarding ethics and morality, which are just as important to address before AI is too advanced. How do we teach machines to be moral when people can't even agree on what moral behavior is? And how do we help people deal with and benefit from the tremendous disruptive change that we anticipate from AI? To help consider these questions, Joshua Greene and Iyad Rahwan kindly agreed to join the podcast. Josh is a professor of psychology and member of the Center for Brain Science Faculty at Harvard University. Iyad is the AT&T Career Development Professor and an associate professor of Media Arts and Sciences at the MIT Media Lab.

-

80,000 Hours with Rob Wiblin and Brenton Mayer

29/09/2017 Duration: 58min

If you want to improve the world as much as possible, what should you do with your career? Should you become a doctor, an engineer or a politician? Should you try to end global poverty, climate change, or international conflict? These are the questions that the research group 80,000 Hours tries to answer. They try to figure out how individuals can set themselves up to help as many people as possible in as big a way as possible. To learn more about their research, Ariel invited Rob Wiblin and Brenton Mayer of 80,000 Hours to the FLI podcast. In this podcast we discuss "earning to give", building career capital, and the most effective ways for individuals to help solve the world's most pressing problems -- including artificial intelligence, nuclear weapons, biotechnology and climate change. If you're interested in tackling these problems, or simply want to learn more about them, this podcast is the perfect place to start.

-

Life 3.0: Being Human in the Age of Artificial Intelligence with Max Tegmark

29/08/2017 Duration: 34min

Elon Musk has called it a compelling guide to the challenges and choices in our quest for a great future of life on Earth and beyond, while Stephen Hawking and Ray Kurzweil have referred to it as an introduction and guide to the most important conversation of our time. “It” is Max Tegmark's new book, Life 3.0: Being Human in the Age of Artificial Intelligence. In this interview, Ariel speaks with Max about the future of artificial intelligence. What will happen when machines surpass humans at every task? Will superhuman artificial intelligence arrive in our lifetime? Can and should it be controlled, and if so, by whom? Can humanity survive in the age of AI? And if so, how can we find meaning and purpose if super-intelligent machines provide for all our needs and make all our contributions superfluous?

-

The Art Of Predicting With Anthony Aguirre And Andrew Critch

31/07/2017 Duration: 57min

How well can we predict the future? In this podcast, Ariel speaks with Anthony Aguirre and Andrew Critch about the art of predicting the future, what constitutes a good prediction, and how we can better predict the advancement of artificial intelligence. They also touch on the difference between predicting a solar eclipse and predicting the weather, what it takes to make money on the stock market, and the bystander effect regarding existential risks. Visit metaculus.com to try your hand at the art of predicting. Anthony is a professor of physics at the University of California at Santa Cruz. He's one of the founders of the Future of Life Institute, of the Foundational Questions Institute, and most recently of Metaculus.com, which is an online effort to crowdsource predictions about the future of science and technology. Andrew is on a two-year leave of absence from MIRI to work with UC Berkeley's Center for Human Compatible AI. He cofounded the Center for Applied Rationality, and previously worked as an algo

-

Banning Nuclear & Autonomous Weapons With Richard Moyes And Miriam Struyk

30/06/2017 Duration: 41min

How does a weapon go from one of the most feared to being banned? And what happens once the weapon is finally banned? To discuss these questions, Ariel spoke with Miriam Struyk and Richard Moyes on the podcast this month. Miriam is Programs Director at PAX. She played a leading role in the campaign banning cluster munitions and developed global campaigns to prohibit financial investments in producers of cluster munitions and nuclear weapons. Richard is the Managing Director of Article 36. He has worked closely with the International Campaign to Abolish Nuclear Weapons, helped found the Campaign to Stop Killer Robots, and coined the phrase “meaningful human control” regarding autonomous weapons.

-

Creative AI With Mark Riedl & Scientists Support A Nuclear Ban

01/06/2017 Duration: 43min

This is a special two-part podcast. First, Mark and Ariel discuss how AIs can use stories and creativity to understand and exhibit culture and ethics, while also gaining "common sense reasoning." They also discuss the “big red button” problem in AI safety research, the process of teaching "rationalization" to AIs, and computational creativity. Mark is an associate professor at the Georgia Tech School of Interactive Computing, where his recent work has focused on human-AI interaction and how humans and AI systems can understand each other. Then, we hear from scientists, politicians and concerned citizens about why they support the upcoming UN negotiations to ban nuclear weapons. Ariel interviewed a broad range of people over the past two months, and highlights are compiled here, including comments by Congresswoman Barbara Lee, Nobel Laureate Martin Chalfie, and FLI president Max Tegmark.

-

Climate Change With Brian Toon And Kevin Trenberth

27/04/2017 Duration: 47min

I recently visited the National Center for Atmospheric Research in Boulder, CO and met with climate scientists Dr. Kevin Trenberth and CU Boulder’s Dr. Brian Toon to have a different climate discussion: not about whether climate change is real, but about what it is, what its effects could be, and how we can prepare for the future.

-

Law and Ethics of AI with Ryan Jenkins and Matt Scherer

31/03/2017 Duration: 58min

The rise of artificial intelligence presents not only technical challenges, but important legal and ethical challenges for society, especially regarding machines like autonomous weapons and self-driving cars. To discuss these issues, I interviewed Matt Scherer and Ryan Jenkins. Matt is an attorney and legal scholar whose scholarship focuses on the intersection between law and artificial intelligence. Ryan is an assistant professor of philosophy and a senior fellow at the Ethics and Emerging Sciences Group at California Polytechnic State University, where he studies the ethics of technology. In this podcast, we discuss accountability and transparency with autonomous systems, government regulation vs. self-regulation, fake news, and the future of autonomous systems.

-

UN Nuclear Weapons Ban With Beatrice Fihn And Susi Snyder

28/02/2017 Duration: 41min

Last October, the United Nations passed a historic resolution to begin negotiations on a treaty to ban nuclear weapons. Previous nuclear treaties have included the Test Ban Treaty and the Non-Proliferation Treaty. But in the 70-plus years of the United Nations, the countries have yet to agree on a treaty to completely ban nuclear weapons. The negotiations will begin this March. To discuss the importance of this event, I interviewed Beatrice Fihn and Susi Snyder. Beatrice is the Executive Director of the International Campaign to Abolish Nuclear Weapons, also known as ICAN, where she is leading a global campaign consisting of about 450 NGOs working together to prohibit nuclear weapons. Susi is the Nuclear Disarmament Program Manager for PAX in the Netherlands, and the principal author of the Don’t Bank on the Bomb series. She is an International Steering Group member of ICAN. (Edited by Tucker Davey.)

-

AI Breakthroughs With Ian Goodfellow And Richard Mallah

31/01/2017 Duration: 54min

2016 saw some significant AI developments. To talk about the AI progress of the last year, we turned to Richard Mallah and Ian Goodfellow. Richard is the director of AI projects at FLI, a senior advisor to multiple AI companies, and the creator of the highest-rated enterprise text analytics platform. Ian is a research scientist at OpenAI, the lead author of a deep learning textbook, and the inventor of Generative Adversarial Networks.

-

FLI 2016 - A Year In Review

30/12/2016 Duration: 32min

FLI's founders and core team -- Max Tegmark, Meia Chita-Tegmark, Anthony Aguirre, Victoria Krakovna, Richard Mallah, Lucas Perry, David Stanley, and Ariel Conn -- discuss the developments of 2016 they were most excited about, as well as why they're looking forward to 2017.

-

Heather Roff and Peter Asaro on Autonomous Weapons

30/11/2016 Duration: 34min

Drs. Heather Roff and Peter Asaro, two experts in autonomous weapons, talk about their work to understand and define the role of autonomous weapons, the problems with autonomous weapons, and why the ethical issues surrounding autonomous weapons are so much more complicated than those surrounding other AI systems.

-

Nuclear Winter With Alan Robock and Brian Toon

31/10/2016 Duration: 46min

I recently sat down with meteorologist Alan Robock from Rutgers University and physicist Brian Toon from the University of Colorado to discuss what is potentially the most devastating consequence of nuclear war: nuclear winter.

-

Robin Hanson On The Age Of Em

28/09/2016 Duration: 24min

Dr. Robin Hanson talks about the Age of Em, the future and evolution of humanity, and his research for his next book.